By Karolina Stanislawska

The recently released GenCast model has made its way into traditional media. While it is not the first successful AI weather model to emerge in recent years, it stands out for its extraordinary accuracy and for its innovative approach to ensemble forecasting, which leverages the architecture of its underlying network: a conditional diffusion model.

Machine learning modelling gives us access to a wide range of network architectures. The architectures of ML-based weather models are often inspired by a similar problem in “mainstream” ML/AI. For example, ClimaX is based on the Vision Transformer, commonly used for processing images, and GraphCast leverages graph neural networks because of the structural similarity between grid points and graph nodes.

GenCast, on the other hand, employs a conditional diffusion model. This approach is reminiscent of another ML weather model called CorrDiff (developed by Nvidia), which focuses on downscaling forecasts from low to high resolution. However, GenCast uses the diffusion model differently: it predicts the future weather state (at time T+1) from two prior time steps (T and T-1).

Ensemble forecasts

Ensemble forecasting in Numerical Weather Prediction emerged from the recognition that a single deterministic forecast often fails to capture the uncertainty inherent in weather predictions.

The concept of sensitivity to initial conditions, illustrated by the “butterfly effect” in chaos theory, highlights how small uncertainties in the starting state of the atmosphere can escalate and lead to divergent outcomes, especially over longer time horizons. Combined with the inherent limitations of numerical calculations and floating-point representation, this sensitivity can cause numerical forecasts that start from nominally the same initial conditions to deviate significantly.

To address this, meteorologists run a set of forecasts instead of relying on just one. This set is called an ensemble forecast, and each individual forecast is referred to as an ensemble member. Ensemble members are typically created either by perturbing the initial conditions or by running the model with a slightly modified configuration (e.g. a different scheme representing microphysics). This approach allows the ensemble to explore a range of possible outcomes, providing a more comprehensive picture of forecast uncertainty.
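To make the idea concrete, here is a minimal sketch of an ensemble built by perturbing initial conditions. It uses the Lorenz-63 system, a classic toy model of chaos, as a stand-in for a real weather model. The function names, the perturbation size, and the number of members are all assumptions chosen for illustration, not settings of any operational system.

```python
import numpy as np


def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz-63 system for an array of states (..., 3)."""
    x, y, z = state[..., 0], state[..., 1], state[..., 2]
    return np.stack([sigma * (y - x), x * (rho - z) - y, x * y - beta * z], axis=-1)


def rk4_step(state, dt):
    """Advance all ensemble members by one fourth-order Runge-Kutta step."""
    k1 = lorenz63(state)
    k2 = lorenz63(state + 0.5 * dt * k1)
    k3 = lorenz63(state + 0.5 * dt * k2)
    k4 = lorenz63(state + dt * k3)
    return state + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def run_ensemble(n_members=20, n_steps=2500, dt=0.01, perturbation=1e-3, seed=0):
    """Return the ensemble spread (mean std across members) at every step."""
    rng = np.random.default_rng(seed)
    base = np.array([1.0, 1.0, 1.0])
    # Each member starts from the same state plus a tiny random perturbation.
    members = base + perturbation * rng.standard_normal((n_members, 3))
    spread = [members.std(axis=0).mean()]
    for _ in range(n_steps):
        members = rk4_step(members, dt)
        spread.append(members.std(axis=0).mean())
    return np.array(spread)


spread = run_ensemble()
print(f"initial spread: {spread[0]:.2e}, final spread: {spread[-1]:.2e}")
```

The members start almost indistinguishable, yet the spread at the end of the run is orders of magnitude larger than at the start. That growth is the uncertainty an ensemble is meant to expose.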

Diffusion models – from random noise to a cat wearing glasses

Diffusion models are one of the most fascinating architectures I’ve encountered. Their elegance and ingenuity deserve a dedicated post to explain the concept in detail. It is these models that serve as the foundation for text-to-image generators.

The core idea is that these networks learn to associate a given condition (most often a text prompt) with an image. The process begins with random noise, similar to the “snow” that old TVs in the 90s showed when no signal was detected, combined with the condition. In the case of image generation, the condition is the text describing the desired content. By iteratively refining the noise, the model transforms it into a coherent image that matches the description, enabling us to create visuals where anything is possible.
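The "iteratively refining the noise" part can be sketched with a toy example. Real diffusion models use a trained neural network as the denoiser. Here that network is replaced by a formula that is only valid under a strong assumption: the "images" are single numbers drawn from a normal distribution centred on the condition. The name `ideal_denoiser`, the noise schedule, and the constant `TARGET_STD` are all hypothetical choices for this illustration.

```python
import numpy as np

TARGET_STD = 0.5  # assumed spread of the "true" data around the condition


def ideal_denoiser(noisy, noise_level, condition):
    """Analytic stand-in for a trained network: the expected clean sample
    given a noisy one, when clean data follow N(condition, TARGET_STD**2)."""
    data_var = TARGET_STD ** 2
    noise_var = noise_level ** 2
    return (data_var * noisy + noise_var * condition) / (data_var + noise_var)


def sample(condition, n_samples=2000, n_steps=200,
           sigma_max=80.0, sigma_min=0.002, seed=0):
    """Turn pure noise into samples by stepping through decreasing noise levels."""
    rng = np.random.default_rng(seed)
    # Noise levels shrink geometrically and finish at exactly zero.
    sigmas = np.append(np.geomspace(sigma_max, sigma_min, n_steps), 0.0)
    x = sigma_max * rng.standard_normal(n_samples)  # start from pure noise
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = ideal_denoiser(x, sigma, condition)
        # Move a little from the noisy sample towards the denoised estimate.
        direction = (x - denoised) / sigma
        x = x + (sigma_next - sigma) * direction
    return x


samples = sample(condition=3.0)
print(f"mean={samples.mean():.2f}, std={samples.std():.2f}")
```

Every sample starts as noise with a huge spread and ends up close to the condition, while different starting noise still yields different final samples. A text-to-image generator does the same thing with millions of pixels and a learned denoiser instead of a one-line formula.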

Mixing them together

Now, how does a generative model predict a weather state? In this context, the equivalent of the prompt “a cat sitting on an office chair in front of a laptop, wearing glasses” would be weather at times T and T-1. The equivalent of the generated cat image is the predicted weather at time T+1.

The GenCast model uses this process to generate a single forecast for the next time step. This procedure is then repeated iteratively (in machine learning terms, autoregressively) to produce forecasts at T+2, T+3 and beyond.

And where does the ensemble come into play? It lies in the noisy TV image! Recall how that “image” was constantly in motion, with black and white dots randomly changing locations. The GenCast ensemble is created by running the model multiple times with different random noise images as input. This turns out to provide enough randomness for each forecast to head in a slightly different direction. Just like your image generator shows you four distinct cat images from the same prompt!
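The autoregressive rollout and the noise-driven ensemble can be sketched in a few lines. This is not GenCast's code: `sample_next_state` is a hypothetical placeholder for one call to the diffusion sampler, and it simply extrapolates the two previous states and adds random noise. The grid shape, the number of members, and the seeds are likewise assumptions.

```python
import numpy as np


def sample_next_state(prev_state, curr_state, rng):
    """Hypothetical stand-in for one conditional diffusion sampling call.

    Conditions on the states at T-1 and T, and draws fresh noise from rng.
    """
    trend = curr_state + 0.5 * (curr_state - prev_state)
    noise = rng.standard_normal(curr_state.shape)
    return trend + 0.1 * noise


def rollout(prev_state, curr_state, n_steps, seed):
    """Autoregressive forecast: each output becomes an input of the next step."""
    rng = np.random.default_rng(seed)
    states = []
    for _ in range(n_steps):
        next_state = sample_next_state(prev_state, curr_state, rng)
        states.append(next_state)
        prev_state, curr_state = curr_state, next_state
    return np.stack(states)


def make_ensemble(prev_state, curr_state, n_steps, n_members):
    """One rollout per member; members differ only in their random noise."""
    return np.stack([
        rollout(prev_state, curr_state, n_steps, seed=member)
        for member in range(n_members)
    ])


grid_shape = (4, 8)                  # tiny toy "weather map"
state_tm1 = np.zeros(grid_shape)     # state at T-1
state_t = np.full(grid_shape, 0.1)   # state at T

ensemble = make_ensemble(state_tm1, state_t, n_steps=5, n_members=4)
ensemble_mean = ensemble.mean(axis=0)    # shape: (steps, lat, lon)
ensemble_spread = ensemble.std(axis=0)   # disagreement between members
print(ensemble.shape)
```

All members share the same two input states. The only thing that changes between them is the seed of the random noise, and that alone is enough for the members to drift apart as the lead time grows.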

Impressive scores of the weather-cat herd

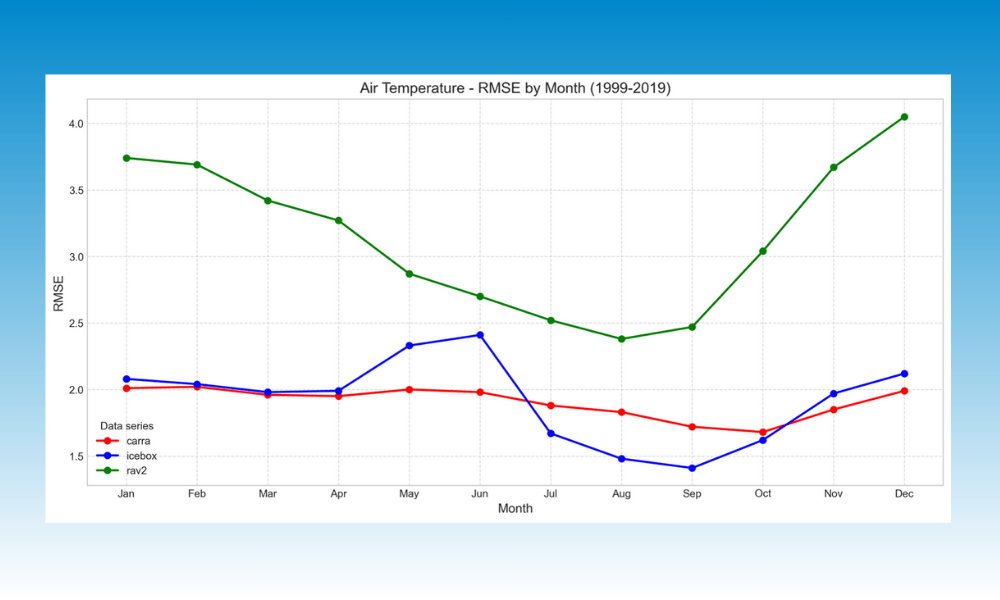

The GenCast model is extraordinary for scoring better than ECMWF’s ensemble model, the gold standard in ensemble forecasting. While it is still constrained by the 0.25-degree resolution of its underlying training data, it represents a promising step forward in the development of efficient and accurate ML-based weather models.

What will come next?

Originally posted at Karolina Stanislawska’s blog AI Weather Hub. Follow the blog for more stories!